2. Understand Licorne

Licorne is a set of tools that eases the handling of ground-based measurements. Currently, it focuses on lidar data, but it is designed to handle data from other sources as well (GPS, radiosonde, model data, etc.).

General goals: - context/goal

2.1. Presentation

The framework is developed for both Python 2 and Python 3. It is designed to gather expertise from different fields and offers an easy and durable way to process instrument data.

This flexible software can be used both for automated routine production and for research, when you need to test different algorithms.

Processing goals: - facilities X...

2.2. Architecture overview

Licorne lets you manipulate and process lidar data. It is meant to be used:

- by scientists, to test, validate, and easily propose new algorithms at different levels;

- by data managers, for routine data production.

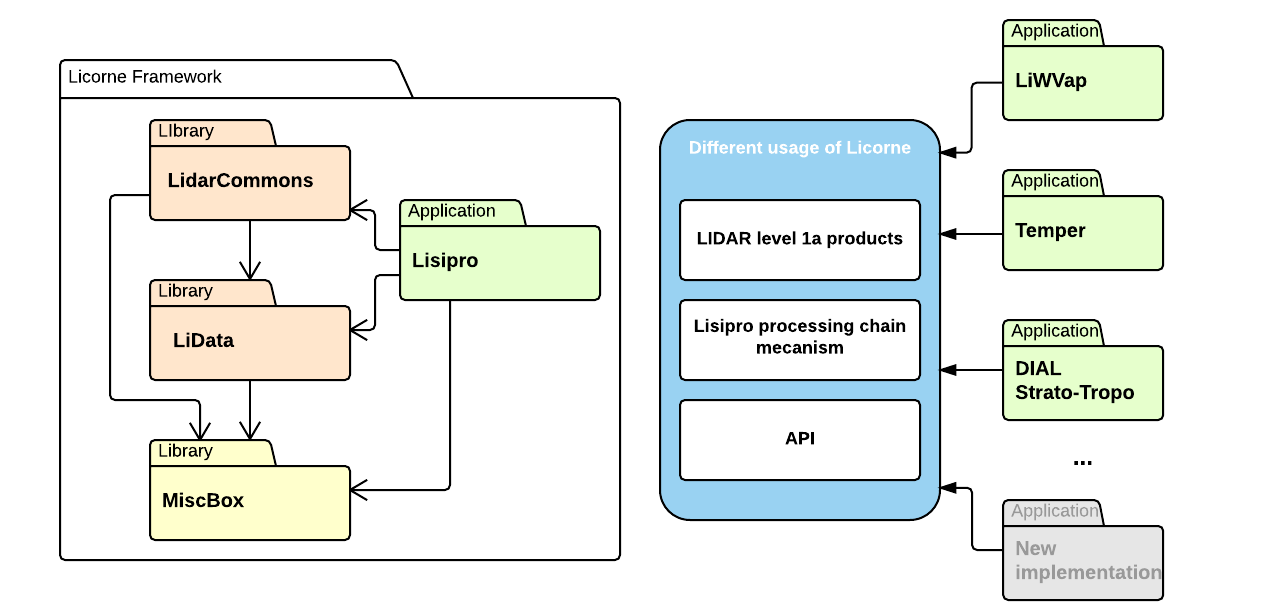

This framework brings together work on algorithm harmonization and data standardization, and aims to capitalize on expert knowledge. It proposes a new approach to developing robust, evolutive software for a community with different and conflicting goals (specific study runs, algorithm innovation, routine data production). Licorne is composed of four libraries, each with its own focus.

Miscbox is a general-purpose library. More of a toolkit, this package handles the tasks that ease the manipulation of data files (FTP interface, XML handling…).

Lidata is the core of the framework. This library provides an abstraction of the geophysical scene you are looking at. It can also adapt to any kind of instrument through the addition of reader and writer plugins.

LidarCommons gathers all the algorithms needed for cleaning, pre-processing, and retrieval. Its main advantage is the ability to version the implementation of an algorithm: evolution, testing, and production “almost always” happen at the same time. The ease with which this library lets you include modifications also makes it a good vector for communication between developers and scientists.

The last brick, Lisipro, is an application that makes life easier for data managers and PIs. It provides an abstraction layer for creating lidar pipelines. Without specific programming knowledge, you can process lidar signals using standards defined by the community and produce interoperable data. It uses a simple command-line interface to process XML files that describe the pipeline steps. Using the web interface, you can, with little effort, configure a pipeline, review the processing done, or simulate signals.

2.3. Different ways to use Licorne depending on your goal

There are two main ways to work with Licorne: either you fire up your favorite text editor, build everything from scratch, and use only the command line, or you take the easy path and use the web interface.

It is also possible to get your hands dirty and use the library directly in Python. This method gives you even more flexibility and access to more powerful functionality, but it requires programming knowledge.

2.4. Understand what the processing chain is and how to build it

The processing chain is the set of instructions that Licorne has to execute. You can think of it as a metaprogramming language. The instructions are executed sequentially. They range from simple ones, like loading files, to more complex ones, like retrieving a temperature profile from a corrected lidar signal.

Internally, this processing chain is represented by an XML file; in the user interface, it is represented by a tree. Each instruction is embedded in a block. Blocks receive arguments and output results.

The processing chain is the link between the high-level actions the framework has to perform and the Python language.
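To make the idea concrete, here is a minimal sketch of a chain interpreter. The XML layout (`<chain>`, `<block>`, `<arg>`, `<link>`), the block names, and the `REGISTRY` of callables are all hypothetical and are not Licorne's actual schema or Lisipro's engine. The sketch only shows blocks executed in order, each receiving literal arguments or "returned values" taken from an earlier block's outputs by zero-based index.

```python
import xml.etree.ElementTree as ET

# Hypothetical registry mapping block names to callables returning tuples.
REGISTRY = {
    "load": lambda values: (list(values),),
    "scale": lambda data, factor: ([x * factor for x in data],),
    "stats": lambda data: (min(data), max(data)),
}

# Hypothetical chain description (not Licorne's real XML schema).
CHAIN_XML = """
<chain>
  <block id="b0" name="load">
    <arg name="values">1,2,3</arg>
  </block>
  <block id="b1" name="scale">
    <link name="data" block="b0" index="0"/>
    <arg name="factor">10</arg>
  </block>
  <block id="b2" name="stats">
    <link name="data" block="b1" index="0"/>
  </block>
</chain>
"""


def parse_value(text):
    """Convert a literal: comma-separated list, int, float, or plain string."""
    if "," in text:
        return [parse_value(part) for part in text.split(",")]
    for cast in (int, float):
        try:
            return cast(text)
        except ValueError:
            pass
    return text  # blank or non-numeric values are passed as-is


def run_chain(xml_text):
    """Execute blocks in document order and return every block's outputs."""
    outputs = {}
    for block in ET.fromstring(xml_text.strip()).findall("block"):
        kwargs = {}
        for arg in block.findall("arg"):
            kwargs[arg.get("name")] = parse_value((arg.text or "").strip())
        for link in block.findall("link"):
            # Returned value: pick one output of an earlier block by index.
            source = outputs[link.get("block")]
            kwargs[link.get("name")] = source[int(link.get("index"))]
        outputs[block.get("id")] = REGISTRY[block.get("name")](**kwargs)
    return outputs


if __name__ == "__main__":
    print(run_chain(CHAIN_XML)["b2"])  # (10, 30)
```

Because execution is strictly sequential, a link can only point to a block placed earlier in the chain, which is why block order in the chain matters.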

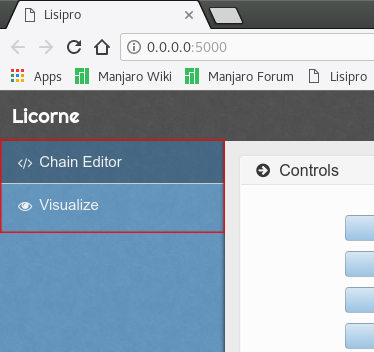

Using the GUI

Start by launching the GUI and open the Chain Editor application using the menu on the left.

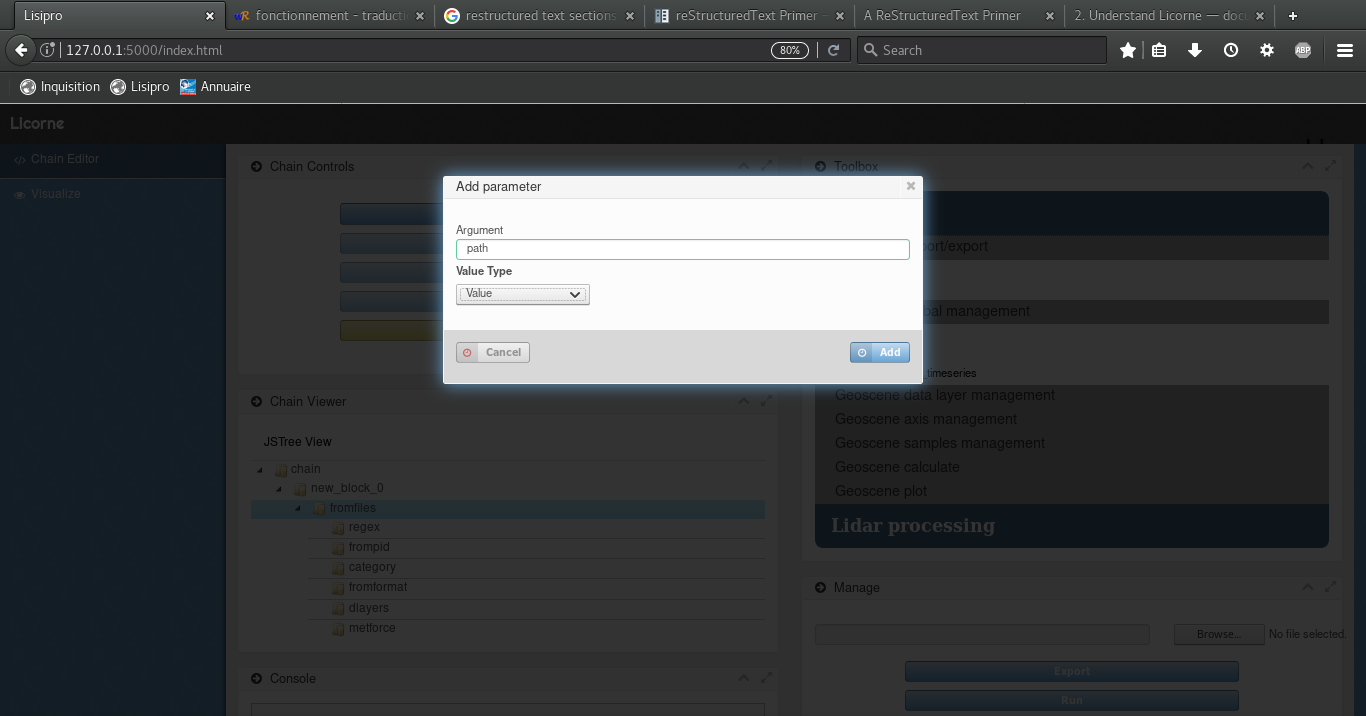

You now have four boxes that will help you build your processing chain.

Chain Controls

The Chain Controls box contains the actions you can perform on the chain, depending on the type of block you select.

The Add button is used to add an input to a block. It opens a pop-up asking for the name and the type of the argument you want to add.

The valid types for an argument are:

- Value: a simple value such as a string, an integer, a float, or a list.

- Dictionary: a set of key-value pairs.

- Ordered Dictionary: same as a dictionary, but the order of the elements inside the dictionary is preserved all the way through.

- Returned Value: a value that you cannot write yourself and that is expected from the output of another block.

Edit lets you change the value of an input argument that is not automatically provided by the application. If you leave it blank, it is passed as-is to the application. This can be a source of errors, so be careful.

Remove deletes an argument you do not want to pass to the block. Be careful with this action: you can easily delete arguments that are mandatory for the block to operate.

Link to output is used to take the output of one block and give it as input to another. When clicked, this button opens a pop-up asking which block to take the output from and, more precisely, which output to take. Because a block can have several outputs, you have to give the (zero-based) index of the output you want to use.

Remove entire block removes a block and all its parameters from the processing chain. All information in this block is lost.

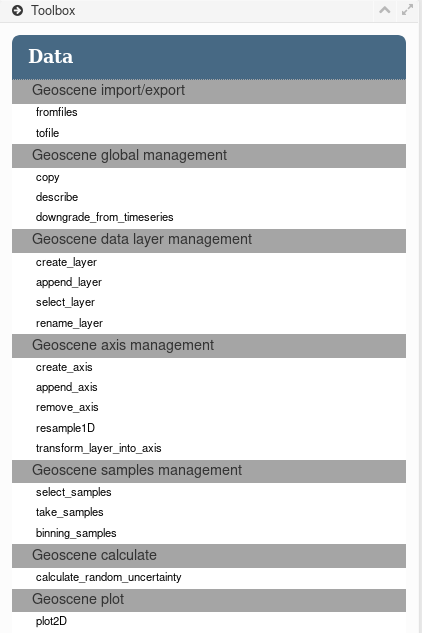

Toolbox

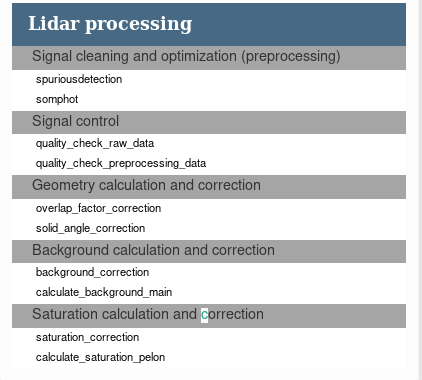

The Toolbox is a clickable, collapsible menu listing all the functions available in the framework, sorted by category. Currently, you will find functions to manipulate geophysical data in the data section, and specific algorithms to manipulate lidar signals in the Lidar processing section.

The lowest clickable level of this menu is draggable: you can drag elements from this menu into the Chain Viewer box to build your processing chain.

Chain Viewer

This box contains an empty tree view when you start the application. The first block is the chain itself, which will contain all the blocks you drag and drop from the Toolbox menu.

Block elements in the tree view are sortable, so you can arrange them in any order. It is up to you, however, to check the semantics of the blocks you put in the chain and how they should be ordered.

Manage

The last box contains buttons to export the processing chain you created to an XML file. This file is then given as input to the lisipro command line to be executed.

Steps to build a processing chain

1 - After launching the web interface and accessing the Chain Editor application, unfold the different menus and sub-menus available in the Toolbox.

2 - Drag the desired functions from the Toolbox and drop them inside the Chain Viewer box. The dropped block appears at the end of the processing chain. If that is not its final place in the chain, drag the block name to its final position.

3 - Unfold the different levels of the block you just dropped and fill in the values using the Edit or Link to output buttons.

4 - When you have filled in all the required information for all the blocks in the processing chain, export it to an XML file using the Export button in the Manage box.

2.5. Licorne capabilities

Data processing

Geoscene import/export

Geoscene global management

licorne.lidata.gsctools.manage.copy()

Return a copy of the GeoScene object. Optionally, you can use the dlayers argument to select some layers, or metforce to force metadata and/or select layers.

Parameters:

- gscobject (lidata.Geoscene): Geoscene to work on.

- dlayers (str | None): list of selected data layers (default None/all layers).

- metforce (str | collections.OrderedDict): XML file or XML-like dictionary with the same schema as the data storage object.

Returns: (gsc.GeoScene) a new GeoScene object.
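The layer selection performed by copy() can be illustrated with a plain-dictionary stand-in for a Geoscene. This is a hypothetical sketch, not Licorne's implementation: the `copy_geoscene` helper is invented, metforce handling is omitted, and lists stand in for NumPy arrays. It deep-copies the container so the original is never modified and, when dlayers is given, keeps only the selected data layers and their datainfo entries.

```python
import copy


def copy_geoscene(gsc, dlayers=None):
    """Return an independent copy of a dict-based geoscene stand-in.

    gsc is a hypothetical dict with 'metadata', 'data', 'datainfo',
    'grid' and 'gridinfo' keys. dlayers=None keeps every layer.
    """
    new = copy.deepcopy(gsc)
    if dlayers is not None:
        if isinstance(dlayers, str):
            dlayers = [dlayers]
        for key in ("data", "datainfo"):
            new[key] = {name: new[key][name] for name in dlayers}
    return new


gsc = {
    "metadata": {"title": "demo"},
    "data": {"rcs": [1.0, 2.0], "bkg": [0.1, 0.1]},
    "datainfo": {"rcs": {"units": "a.u."}, "bkg": {"units": "a.u."}},
    "grid": {"axis": {"Z": [100.0, 200.0]}},
    "gridinfo": {"axis": {"Z": {"units": "m"}}},
}
subset = copy_geoscene(gsc, dlayers=["rcs"])
print(sorted(subset["data"]))  # ['rcs']
```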

licorne.lidata.gsctools.manage.describe()

Display metadata, datainfo and gridinfo information and an overview of data and grid.

Parameters:

- gscobject (lidata.Geoscene): Geoscene to describe.

licorne.lidata.gsctools.manage.downgrade_from_timeseries()

Downgrade GeoScene from time series feature type. GeoScene time dimension must be equal to one.

Parameters:

- gscobject (licorne.lidata.Geoscene): Geoscene to work on.

- execute_binning (bool): bin all samples into one bin on the time axis before removing the time axis (default True).

- binning_statistic (str): binning statistic used: ‘sum’ (default) or ‘mean’.
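As an illustration of execute_binning and binning_statistic, the hedged sketch below collapses a list-of-lists layer whose first dimension is time into a single profile (sum or mean over all time samples), which is what leaves a time dimension of one. The `collapse_time` helper and the list layout are assumptions for illustration, not the lidata code.

```python
def collapse_time(layer, statistic="sum"):
    """Bin all time samples of a 2-D layer [time][range] into one profile."""
    if statistic not in ("sum", "mean"):
        raise ValueError("statistic must be 'sum' or 'mean'")
    # zip(*layer) iterates over range gates, each holding all time samples.
    profile = [sum(column) for column in zip(*layer)]
    if statistic == "mean":
        profile = [value / len(layer) for value in profile]
    return profile


signal = [[1.0, 4.0, 9.0],   # time sample 0
          [3.0, 2.0, 1.0]]   # time sample 1
print(collapse_time(signal))          # [4.0, 6.0, 10.0]
print(collapse_time(signal, "mean"))  # [2.0, 3.0, 5.0]
```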

Geoscene data layer management

licorne.lidata.gsctools.manage.create_layer()

Create a layer inside a geoscene.

Parameters:

- gscobject (lidata.Geoscene): Geoscene where the layer will be created.

- layername (str): data layer name.

- shape (tuple): data array shape

- data_type (numpy.dtype): data array type.

- feature_type (str): Feature type of the layer created.

- data_array (list | numpy.ndarray): Array containing the values.

- axis_list (list): List of axes the layer is tied to.

- fill_value (int | float): Value used to fill the array if necessary.

licorne.lidata.gsctools.manage.append_layer()

Copy and insert a data layer and datainfo layer from another GeoScene object.

Parameters:

- gscobject (lidata.Geoscene): Geoscene where the layer will be appended.

- layername (str): data layer name.

- gscobject_source (gsc.GeoScene): GeoScene object where the layer is originally located.

- rename (str | None): Name of the new layer in the GeoScene. Default is the same name as the original.

licorne.lidata.gsctools.manage.select_layer()

Select layers from a subset of expected datainfo keys and values and/or from a regex search on the layer’s name.

Parameters:

- gscobject (lidata.Geoscene): Geoscene from which layers will be selected.

- wanted_datainfo_values (dict): Subset of expected datainfo keys and values (default empty/not used).

- layer_regex (str): Optional regular expression for layer’s name selection (default None/not used).

- dlayer (list): Optional pre-selection layers’ name list (default []/all existing layers).

Returns: (str | list of str | None) Selected layers. If only one layer is selected, a string is returned. If no layer is selected, None is returned.
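The selection rules of select_layer() can be sketched over a plain datainfo dictionary: a subset match on datainfo keys and values, an optional regex on the layer name, an optional pre-selection list, and the str/list/None return convention. The `select_layers` function is a hypothetical re-implementation for illustration; using `re.search` (rather than `re.match`) for layer_regex is an assumption based on the "regex search" wording.

```python
import re


def select_layers(datainfo, wanted_datainfo_values=None, layer_regex=None,
                  dlayer=None):
    """Return matching layer name(s) from a {layer: {key: value}} dict.

    One match -> str, several -> list of str, none -> None.
    """
    wanted = wanted_datainfo_values or {}
    candidates = dlayer if dlayer else list(datainfo)
    selected = []
    for name in candidates:
        info = datainfo.get(name, {})
        # Every wanted key must be present with the wanted value.
        if any(info.get(key) != value for key, value in wanted.items()):
            continue
        if layer_regex is not None and not re.search(layer_regex, name):
            continue
        selected.append(name)
    if not selected:
        return None
    return selected[0] if len(selected) == 1 else selected


datainfo = {
    "rcs_532": {"units": "a.u.", "wavelength": 532},
    "rcs_1064": {"units": "a.u.", "wavelength": 1064},
    "temperature": {"units": "K"},
}
print(select_layers(datainfo, {"units": "a.u."}))     # ['rcs_532', 'rcs_1064']
print(select_layers(datainfo, layer_regex=r"1064$"))  # rcs_1064
print(select_layers(datainfo, {"units": "m"}))        # None
```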

licorne.lidata.gsctools.manage.rename_layer()

Rename a layer and automatically update the datainfo fields that contain the layer name (uncertainty fields). With change_unc_layer, you can also automatically update the names of associated uncertainty layers.

Parameters:

- gscobject (lidata.Geoscene): Geoscene in which a layer should be renamed.

- layername (str): Name of the layer to rename.

- rename (str): New name of the layer.

- change_unc_layer (bool): Automatically update the names of associated uncertainty layers.
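A hedged sketch of the renaming logic on a dict-based stand-in: the layer key is renamed in 'data' and 'datainfo', and every datainfo string value equal to a renamed layer name is updated. The datainfo field names (`uncertainty_layer`, `source_layer`) and the `<layer>_unc` naming convention for uncertainty layers are purely hypothetical.

```python
def rename_layer(gsc, layername, rename, change_unc_layer=False):
    """Rename a layer in a dict-based geoscene stand-in (hypothetical)."""
    mapping = {layername: rename}
    if change_unc_layer:
        # Assumed convention: the uncertainty layer of X is named X_unc.
        old_unc = layername + "_unc"
        if old_unc in gsc["data"]:
            mapping[old_unc] = rename + "_unc"
    for old, new in mapping.items():
        gsc["data"][new] = gsc["data"].pop(old)
        gsc["datainfo"][new] = gsc["datainfo"].pop(old)
    # Update datainfo fields whose value references a renamed layer.
    for info in gsc["datainfo"].values():
        for key, value in info.items():
            if isinstance(value, str) and value in mapping:
                info[key] = mapping[value]
    return gsc


gsc = {
    "data": {"sig": [5.0], "sig_unc": [0.5]},
    "datainfo": {"sig": {"uncertainty_layer": "sig_unc"},
                 "sig_unc": {"source_layer": "sig"}},
}
rename_layer(gsc, "sig", "signal", change_unc_layer=True)
print(sorted(gsc["data"]))        # ['signal', 'signal_unc']
print(gsc["datainfo"]["signal"])  # {'uncertainty_layer': 'signal_unc'}
```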

Geoscene axis management

licorne.lidata.gsctools.manage.create_axis()

Create a new axis grid layer and gridinfo layer.

Parameters:

- gscobject (lidata.Geoscene): Geoscene in which an axis will be created.

- axisname (str): axis name.

- shape (tuple): data array shape. Only one dimension.

- data_type (numpy.dtype): data array type.

- sampling_type (str): axis sampling type (see iodata.SUPPORTED_SAMPLING_TYPE).

- cell_position (str): axis sample cell position (see iodata.SUPPORTED_CELL_POSITION).

- data_array (numpy.ndarray | None): you can optionally use an existing numpy.ndarray to insert into the data layer (default None).

- associated_layers (list | None): you can optionally set the list of associated data layers (default None/empty list). Warning: the axis must be the LAST data dimension (append mode).

licorne.lidata.gsctools.manage.append_axis()

Copy and insert a data layer and datainfo layer from another GeoScene object.

Parameters:

- gscobject (lidata.Geoscene): Geoscene where the layer will be appended.

- layername (str): data layer name.

- gscobject_source (gsc.GeoScene): GeoScene object where the layer is originally located.

- rename (str | None): Name of the new layer in the GeoScene. Default is the same name as the original.

licorne.lidata.gsctools.manage.remove_axis()

Remove an axis grid layer and gridinfo layer from the GeoScene object. All datainfo references to this axis are removed too.

Parameters:

- gscobject (lidata.Geoscene): Geoscene from which an axis will be removed.

- axisname (str): axis name.

licorne.lidata.gsctools.manage.transform_layer_into_axis()

Move layer as axis.

Parameters:

- layername (str): layer name.

- use_reference_axis (str): to move the layer as a reference axis (T, Z, Y, X), set the name of that reference axis (default None/not a reference axis).

- quasiregular_rate (float): limit rate to define a ‘quasiregular’ sampling type (default 0.05).

- cell_position (str): force cell position definition (default auto).

- rename (str): new name for axis (default None/layername used).

- add_axis_to_layers (list): list of layers into which you want to set new axis name into axis layer’s metadata (default empty list).

Geoscene samples management

licorne.lidata.gsctools.manage.select_samples()

Select samples from a given axis.

Parameters:

- axisname (str): axis name.

- a_value (int | float): first value looked for.

- b_value (int | float | None): second value, which defines together with a_value the range looked for (default None/not used).

- delta (int | float | None): if b_value is not used, a delta value can be used with a_value to define the range looked for (default None/not used).

Returns: (list) list of selected indices.
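The three ways of defining the search in select_samples() (a single value, an [a_value, b_value] range, or a_value ± delta) can be sketched on a plain axis list. This illustrative re-implementation rests on two assumptions that Licorne's actual rules may not share: range bounds are inclusive, and a single a_value selects the nearest sample.

```python
def select_samples(axis, a_value, b_value=None, delta=None):
    """Return indices of axis samples matching a value or a range."""
    if b_value is None and delta is not None:
        a_value, b_value = a_value - delta, a_value + delta
    if b_value is None:
        # Assumption: a single value selects the nearest sample.
        nearest = min(range(len(axis)), key=lambda i: abs(axis[i] - a_value))
        return [nearest]
    low, high = sorted((a_value, b_value))
    return [i for i, value in enumerate(axis) if low <= value <= high]


altitude = [0.0, 7.5, 15.0, 22.5, 30.0]
print(select_samples(altitude, 14.0))             # [2]
print(select_samples(altitude, 7.5, 22.5))        # [1, 2, 3]
print(select_samples(altitude, 15.0, delta=7.5))  # [1, 2, 3]
```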

licorne.lidata.gsctools.manage.take_samples()

Select and keep samples using a mask layer (unselected samples are removed).

The mask layer should have one dimension. If the mask selects no samples (0 everywhere), an exception is raised. The mask layer maskname is removed afterwards. GeoScene metadata (coverage, number of observations) are adjusted.

Parameters:

- gscobject (lidata.Geoscene): Geoscene from which to take samples.

- maskname (str): mask name.
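A minimal sketch of the take_samples() behavior on a dict-based stand-in. It assumes the masked axis is the first dimension of every data layer and takes an explicit `axisname`, an extra argument not in the documented signature (the real function presumably infers the axis from the mask layer). It updates 'number_of_observation' from the DSO description and leaves coverage untouched.

```python
def take_samples(gsc, maskname, axisname):
    """Keep samples where the 1-D mask is non-zero, then drop the mask."""
    mask = gsc["data"][maskname]
    keep = [i for i, flag in enumerate(mask) if flag]
    if not keep:
        # Checked before any modification, so gsc is left untouched.
        raise ValueError("mask %r selects no samples" % maskname)
    del gsc["data"][maskname]
    gsc["datainfo"].pop(maskname, None)
    axis = gsc["grid"]["axis"]
    axis[axisname] = [axis[axisname][i] for i in keep]
    for name, layer in gsc["data"].items():
        # Assumption: the masked axis is the first dimension of each layer.
        gsc["data"][name] = [layer[i] for i in keep]
    gsc["metadata"]["number_of_observation"] = len(keep)
    return gsc


gsc = {
    "metadata": {"number_of_observation": 4},
    "data": {"rcs": [10, 11, 12, 13], "cloud_free": [1, 0, 1, 1]},
    "datainfo": {"rcs": {}, "cloud_free": {}},
    "grid": {"axis": {"T": [0, 60, 120, 180]}},
}
take_samples(gsc, "cloud_free", "T")
print(gsc["grid"]["axis"]["T"], gsc["data"]["rcs"])  # [0, 120, 180] [10, 12, 13]
```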

licorne.lidata.gsctools.manage.binning_samples()

Bin samples along a given axis.

Two binning modes are available:

- with a given number of samples per bin (binsamples argument)

- with a given output bin resolution (binresolution argument)

In binsamples mode, the representativeness of the last bin is validated using the binvalidity argument; if it is insufficient, the last bin is removed. In binresolution mode, the representativeness of every bin is validated using binvalidity, relative to the computed maximum number of samples per bin. The mask layer automatically uses the min statistic (if one sample is off, the bin is off).

Parameters:

- gscobject (lidata.Geoscene): Geoscene where samples will be binned.

- axisname (str): axis name.

- binsamples (int | None): desired number of samples per bin (do not set it if you choose binresolution mode).

- binresolution (int | float | None): desired bin resolution (do not set it if you choose binsamples mode).

- binvalidity (float): bin validity rate relative to the number of samples per bin (default 0.6, i.e. 60% of the maximum).

- statistic (str): binning statistic used; available: sum (default), mean, min, max, or median.
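The binsamples mode and its last-bin validity rule can be illustrated on a 1-D list. This hedged sketch covers only binsamples mode and is not the lidata implementation. The exact comparison against binvalidity is an assumption: the incomplete last bin is kept only if it holds at least binvalidity × binsamples samples.

```python
import statistics

STATISTICS = {
    "sum": sum,
    "mean": statistics.mean,
    "min": min,
    "max": max,
    "median": statistics.median,
}


def bin_samples(values, binsamples, binvalidity=0.6, statistic="sum"):
    """Group consecutive samples into bins of binsamples and reduce each."""
    reducer = STATISTICS[statistic]
    bins = [values[i:i + binsamples] for i in range(0, len(values), binsamples)]
    # Drop an incomplete last bin that is not representative enough.
    if bins and len(bins[-1]) < binvalidity * binsamples:
        bins.pop()
    return [reducer(chunk) for chunk in bins]


profile = [1, 2, 3, 4, 5, 6, 7]
print(bin_samples(profile, 3))                   # [6, 15]
print(bin_samples(profile, 2))                   # [3, 7, 11]
print(bin_samples(profile, 4, statistic="max"))  # [4, 7]
```

For a 0/1 mask layer, calling it with statistic="min" reproduces the "one sample off, bin off" rule: `bin_samples([1, 1, 0, 1, 1, 1], 3, statistic="min")` gives `[0, 1]`.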

Geoscene calculate

licorne.lidata.gsctools.manage.calculate_random_uncertainty()

Calculate the random uncertainty from a data layer and create a new uncertainty layer.

Parameters:

- gscobject (lidata.Geoscene): Geoscene where the random uncertainty will be calculated.

- target_layername (str): target data layer name.

- distribution (str): statistical distribution (default ‘poisson’/poisson distribution).

- midfix_outputlayer (str): midfix for uncertainty layer name (default ‘auto’/no midfix)
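For photon-counting lidar signals, a Poisson distribution implies that the standard deviation of a count N is √N. The sketch below assumes this is what the 'poisson' option computes. The new layer name, built as target + optional midfix + "_unc", and the `add_poisson_uncertainty` helper are both hypothetical.

```python
import math


def add_poisson_uncertainty(gsc, target_layername, midfix=None):
    """Add a layer holding sqrt(N) for each count N of the target layer."""
    counts = gsc["data"][target_layername]
    if any(value < 0 for value in counts):
        raise ValueError("Poisson counts must be non-negative")
    parts = [target_layername] + ([midfix] if midfix else []) + ["unc"]
    name = "_".join(parts)
    gsc["data"][name] = [math.sqrt(value) for value in counts]
    gsc["datainfo"][name] = {
        "long_name": "random uncertainty of " + target_layername}
    return name


gsc = {"data": {"counts": [0, 4, 100]}, "datainfo": {"counts": {}}}
name = add_poisson_uncertainty(gsc, "counts")
print(name, gsc["data"][name])  # counts_unc [0.0, 2.0, 10.0]
```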

Geoscene plot

2.6. Geoscene: the high-level data container and the data manager for Licorne

What is it?

All data in Licorne are stored in a common and flexible in-memory data storage model that gives us a standardized data container: the Geoscene (see the next chapter for details). You can easily find the data and metadata in it through simple Python dictionaries: ‘metadata’ for the global metadata describing the represented dataset, ‘data’ for the data (several data layers stored as standard NumPy n-dimensional arrays), ‘datainfo’ for metadata about each data layer (data description), ‘grid’ for axis and geographic reference values, and ‘gridinfo’ for metadata about the grid data (axis descriptions, etc.). In Licorne, all data are stored this way, so Licorne’s processing functions are never surprised: the data organization is always the same in input and in output, a Geoscene (in function signatures, you will find it as ‘gscobject’).

Of course, the units or names of data parameters can differ. This is why the Geoscene is a self-describing container: a Licorne function can inspect and test units, names, axes, etc. Where necessary, we have some conventions per data category (as few as possible), for example Licorne’s standard names or the units of a raw lidar signal (see the conventions part).

We have briefly described the data storage, but the Geoscene is more than that. It includes a read/write plugin system: your data format is simply a plugin identified by its category (lidar, radiosonde, model, etc.) and an identifier (product identifier, or pid). All plugins are developed following the same model. Technically, you can read lidar data and write it in a radiosonde data file format! You can import and export data however and whenever you want.

Moreover, the Geoscene is a data container with tools to control data, to select and extract subsets of data, and to transform data (you can play with the resolution, etc.). And that is not all: quite often, raw data files lack metadata. No problem, Licorne has a mechanism to add external metadata during import or export. It is called metforce (see the Force the metadata part). Lastly, the Geoscene can import one or several data files at the same time: it can manage a dataset and is particularly optimized for time series.
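The plugin idea (a reader/writer identified by a data category and a pid) can be sketched as a registry keyed by (category, pid). Everything below is hypothetical: the `register_plugin` decorator, the plugin names, and the two toy text formats. It only illustrates why reading with one plugin and writing with another works: both sides exchange the same common container.

```python
import csv
import io

PLUGINS = {}


def register_plugin(category, pid):
    """Class decorator storing a plugin instance under (category, pid)."""
    def decorator(cls):
        PLUGINS[(category, pid)] = cls()
        return cls
    return decorator


@register_plugin("lidar", "toy_csv")
class ToyLidarCsv:
    def read(self, text):
        """Parse 'altitude,signal' lines into the common container layout."""
        rows = list(csv.reader(io.StringIO(text)))
        return {"metadata": {"featureType": "profile"},
                "data": {"signal": [float(r[1]) for r in rows]},
                "grid": {"axis": {"Z": [float(r[0]) for r in rows]}}}


@register_plugin("radiosonde", "toy_text")
class ToyRadiosondeText:
    def write(self, gsc):
        """Serialize the container as space-separated 'altitude value' lines."""
        pairs = zip(gsc["grid"]["axis"]["Z"], gsc["data"]["signal"])
        return "\n".join("%g %g" % pair for pair in pairs)


gsc = PLUGINS[("lidar", "toy_csv")].read("100,1.5\n200,0.75\n")
print(PLUGINS[("radiosonde", "toy_text")].write(gsc))
```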

In conclusion, the Geoscene lets us abstract away data management and focus on data processing itself. You can import what you want and export it however you want.

Note for developers: the Geoscene is the high-level data container, built on a low-level data container (iodata) and a data access model (ioaccess).

A common and flexible data storage model

Licorne’s common data storage object (common DSO)

This is the in-memory structure of the Geoscene container:

- metadata [‘title’= string value, ‘featureType’= string value, ‘time_coverage_start’= datetime value, ‘number_of_observation’= integer value, ...]

- data [‘layer1’: [numpy ndarray], ‘layer2’: [numpy ndarray], ...]

- datainfo[‘layer1’: {‘long_name’= string value, ‘units’= string value, ...}, ‘layer2’: {‘long_name’= string value, ‘units’= string value, ...}, ...]

- grid[‘axis’][‘T’: [numpy 1darray], ‘Z’: [numpy 1darray], ‘Y’: [numpy 1darray], ‘X’: [numpy 1darray], ...] ; grid[‘coordinate_reference_system’]= EPSG integer code value

- gridinfo[‘axis’][‘T’: {‘long_name’= string value, ‘units’= string value, ...}, ‘Z’: { }, ...] ; gridinfo[‘coordinate_reference_system’: {‘espag_code’= string value, ...}]

The Geoscene helps you import, export, manage, and process the data in a standard way. But whenever you want, you can directly access the data values as classic NumPy arrays and use them in your own Python script.
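The nested dictionaries of the common DSO can be mimicked in plain Python to show what direct access looks like. In this sketch, lists stand in for the NumPy arrays a real Geoscene holds. The layer name 'rcs', the values, and the EPSG code are made up, and how a real Geoscene object exposes these dictionaries should be checked in the lidata API.

```python
from datetime import datetime

# Hypothetical stand-in mirroring the common DSO layout described above.
gsc = {
    "metadata": {"title": "demo profile", "featureType": "profile",
                 "time_coverage_start": datetime(2020, 1, 1, 12, 0),
                 "number_of_observation": 1},
    "data": {"rcs": [3.2, 2.9, 2.1]},
    "datainfo": {"rcs": {"long_name": "range corrected signal",
                         "units": "a.u."}},
    "grid": {"axis": {"Z": [500.0, 1000.0, 1500.0]},
             "coordinate_reference_system": 4326},
    "gridinfo": {"axis": {"Z": {"long_name": "altitude", "units": "m"}}},
}

# Direct access: pair each altitude with its signal value.
altitudes = gsc["grid"]["axis"]["Z"]
signal = gsc["data"]["rcs"]
units = gsc["datainfo"]["rcs"]["units"]
for z, value in zip(altitudes, signal):
    print("%6.0f m  %.1f %s" % (z, value, units))

# Index of the strongest signal, then its altitude with the axis units.
peak = max(range(len(signal)), key=signal.__getitem__)
print("peak at", altitudes[peak], gsc["gridinfo"]["axis"]["Z"]["units"])
```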

Metadata and data layer names

Import/export capabilities

Force the metadata

Sometimes the original data file lacks metadata, and you would have to add the missing metadata through software parameters. Not anymore: you can simply add or modify metadata during import using the metforce option. You can modify Licorne’s standard metadata names and/or values, and add any new metadata you need. You can do the same during export, which lets you update your output data structure to a new template.
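Conceptually, metforce overlays external metadata on what the reader found. The sketch below applies a simple assumed rule to a plain dictionary: keys can be renamed through a hypothetical `rename` mapping, forced values win, and new keys are added. The real option takes an XML file or an XML-like OrderedDict following the data storage schema, and its exact merge semantics are not reproduced here.

```python
from collections import OrderedDict


def apply_metforce(metadata, forced, rename=None):
    """Return a copy of metadata updated with forced values and renames."""
    result = OrderedDict(metadata)  # the reader's metadata is not modified
    for old, new in (rename or {}).items():
        if old in result:
            result[new] = result.pop(old)
    result.update(forced)  # forced values override or extend
    return result


raw = {"title": "", "station": "demo station"}
forced = OrderedDict([("title", "demo lidar"), ("institution", "demo lab")])
meta = apply_metforce(raw, forced, rename={"station": "site_name"})
print(dict(meta))
# {'title': 'demo lidar', 'site_name': 'demo station', 'institution': 'demo lab'}
```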

Derived data storage object from the common DSO

Write my data plugin

Play with a GeoScene

Understand the limitations

More about axes

More about conventions

2.7. Evolutive algorithms

To ensure the long-term sustainability of the Licorne framework, the implemented algorithms are said to be evolutive. Such algorithms can have several implementations of the same algorithm, which you configure according to your needs.

An evolutive algorithm is configured with three parameters. The <version> is the name of the algorithm version to use.

The <param> tag contains all the parameters that do not vary over time. These parameters are the configuration that allows the algorithm to work.

Finally, the <datain> tag contains all the variables that the algorithm has to process.
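An evolutive algorithm can be sketched as a registry of implementations keyed by version, configured with fixed params and fed with datain. The version names, the background-subtraction example, and the dictionary standing in for the <version>, <param>, and <datain> tags are all hypothetical.

```python
IMPLEMENTATIONS = {}


def version(name):
    """Register a function as one version of the algorithm."""
    def decorator(func):
        IMPLEMENTATIONS[name] = func
        return func
    return decorator


@version("v1_constant")
def subtract_constant(param, datain):
    # param holds time-invariant configuration; datain holds the data.
    return [x - param["offset"] for x in datain["signal"]]


@version("v2_tail_mean")
def subtract_tail_mean(param, datain):
    # Estimate the background as the mean of the last samples.
    tail = datain["signal"][-param["tail_samples"]:]
    background = sum(tail) / len(tail)
    return [x - background for x in datain["signal"]]


def run_evolutive(config):
    """Dispatch to the implementation named by config['version']."""
    func = IMPLEMENTATIONS[config["version"]]
    return func(config["param"], config["datain"])


signal = [10.0, 6.0, 2.0, 2.0]
print(run_evolutive({"version": "v1_constant", "param": {"offset": 1.0},
                     "datain": {"signal": signal}}))  # [9.0, 5.0, 1.0, 1.0]
print(run_evolutive({"version": "v2_tail_mean", "param": {"tail_samples": 2},
                     "datain": {"signal": signal}}))  # [8.0, 4.0, 0.0, 0.0]
```

Keeping every version registered side by side is what lets a production chain pin one version while a new one is being tested.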

2.8. Low-level tools to manipulate files, filenames, and paths, to move your data files... and more

Where in the framework

You are in the Miscellaneous Toolbox part (licorne.miscbox).

Play with filenames and file paths... look for a date-time... search a directory tree

Read/write parameter files

Manipulate and exchange metadata or parameters using Licorne’s dic-XML DSO model

Put, get and re-organize data files

The msend module lets you send data files using FTP, local copy, or email. It can send data files from a complex date-time directory tree (FTP and local copy only) and, in the same operation, change the directory tree organization.

Sending data files is made safe by a list: the shopping list. The process is:

- 0/ declare an existing shopping list or create one;

- 1/ list the data files to send and write their origin and destination into this shopping list (append mode);

- 2/ send the listed data files, removing each entry only once its transfer is done.

See the mdsl module to use the shopping list model. You can of course select data files by last modification date or with a regex. Local path input arguments support dynamic date definition using a YMD string pattern (see mstring).
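The shopping-list procedure can be sketched for the local-copy case with the standard library. The list file format (one tab-separated origin/destination pair per line) and the function names are assumptions; this is not the msend or mdsl API. Failed transfers stay in the list, so a later run can retry them.

```python
import os
import shutil
import tempfile


def append_to_list(list_path, pairs):
    """Step 1: append origin/destination pairs to the shopping list file."""
    with open(list_path, "a") as handle:
        for origin, destination in pairs:
            handle.write("%s\t%s\n" % (origin, destination))


def send_listed(list_path):
    """Step 2: copy each listed file; keep only the entries that failed."""
    with open(list_path) as handle:
        entries = [line.rstrip("\n").split("\t") for line in handle
                   if line.strip()]
    remaining = []
    for origin, destination in entries:
        try:
            folder = os.path.dirname(destination)
            if folder:
                os.makedirs(folder, exist_ok=True)  # build the target tree
            shutil.copy2(origin, destination)
        except OSError:
            remaining.append((origin, destination))
    # Rewrite the list so that only transfers still to do are kept.
    with open(list_path, "w") as handle:
        for origin, destination in remaining:
            handle.write("%s\t%s\n" % (origin, destination))
    return remaining


# Usage on a throw-away directory tree.
work = tempfile.mkdtemp()
src = os.path.join(work, "in", "20200101_lidar.nc")
os.makedirs(os.path.dirname(src))
with open(src, "w") as handle:
    handle.write("data")
missing = os.path.join(work, "in", "absent.nc")
shopping = os.path.join(work, "shopping.list")  # step 0: new list
target = os.path.join(work, "out", "2020", "01", "01", "20200101_lidar.nc")
append_to_list(shopping, [(src, target),
                          (missing, os.path.join(work, "out", "absent.nc"))])
left = send_listed(shopping)
print(left)  # only the pair for the missing file is left
```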

To get remote data files, you can use the mget module, which is organized like msend.

To use these features in a production flow, you can use Licorne’s utilities: licorne_sendmydata.py and licorne_getremotedata.py.